Through the series, we’ll go the whole way, from describing what continuous delivery is, through what tools to use and why, to creating your development environment, and all the way to automating your delivery and collating metrics.

Examples will refer to a scalable public REST JSON service API and the problems we’ve had to solve along the way.

PICTURES PLEASE

You might be using continuous integration in your workplace or at home, and it may be something you are familiar with. Chances are CI works really well within your team and on your project, but doesn’t work so well across all projects, and probably sucks between departments, particularly as your development moves towards being released in front of customers.

Paradoxically, software development is an industry where the production line to deployment becomes progressively more manual the closer you get to the customer.

This is such a waste of time and money.

There are some great books on the topic of CI and its bigger and bolder brother, Continuous Delivery, which attempts to address the problem above. Here we’ll pick out Jez Humble and David Farley’s epic Continuous Delivery for background reading, but as with most things, you haven’t got time and it’s a big topic, so we’ve tried to explain it in pictures.

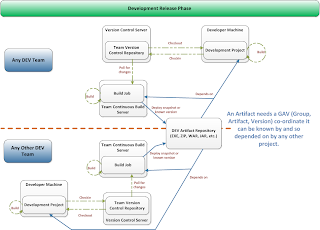

Development release phase

Here is a picture of an idealised development release phase for a complicated project with lots of technologies.

A complicated project may be made up of many different parts. The process may differ from team to team and technology to technology, but a few key pieces need to be common to every process and every technology:

- All pieces of a complicated project should be under version control, hence the team version control repository

- Each piece of the complicated project should be built centrally on the team’s continuous build server so all changes get batched up and built as part of the normal process of continuous build or continuous integration (see Continuous Integration)

- Once successfully built, the artifact (JAR, EXE, ZIP, GEM, RPM, whatever) that is the product of a build should be deployed to an artifact repository that is visible to all teams under a known set of GAV co-ordinates (Group, Artifact, Version) so that something further downstream (closer to being in front of a customer) can depend on (so download) the built artifact as part of a larger whole.
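
To make the GAV idea concrete, here is a minimal sketch of how a set of co-ordinates can map to a predictable location in an artifact repository. It assumes a Maven-style repository layout; the `Gav` class and the example co-ordinates are hypothetical and only there for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gav:
    """Group, Artifact, Version co-ordinates for one built artifact."""
    group: str
    artifact: str
    version: str

    def repository_path(self, packaging: str = "jar") -> str:
        # Maven-style layout: dots in the group become directories,
        # followed by artifact, version, and the versioned file name.
        group_path = self.group.replace(".", "/")
        filename = f"{self.artifact}-{self.version}.{packaging}"
        return f"{group_path}/{self.artifact}/{self.version}/{filename}"


# Hypothetical co-ordinates, for illustration only.
api = Gav("com.example.service", "rest-api", "1.4.2")
print(api.repository_path())
# prints com/example/service/rest-api/1.4.2/rest-api-1.4.2.jar
```

Because the path is derived purely from the co-ordinates, anything downstream only needs to know the three values to find and download the artifact.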

This is where the second picture comes in.

Product release phase

Any moderately complicated solution is going to be made up of many different parts: software, hardware, configuration, and supporting 3rd party applications. What often happens if you work in a place where there is a separation between development and any other part of the business (a.k.a. the usual hell) is that your artifact, which is only a small part of the overall solution, falls off the end of the development conveyor belt, crashes to the floor, and at some indeterminate point in the future is swept up and taken on by people who may have never seen it before and have no idea what to do with it.

Sound familiar?

The result of this lack of departmental collectivism is, as a general rule, chaos, panic, bad blood, or all of the above, particularly as product release time nears.

So things need to be joined up, don’t they?

Here is a picture of what could happen after the development release phase of each component part of a solution or product:

The solution or product is made of constituent parts at known versions. This bundle of parts can each be pulled from the DEV artifact repository, and can be expressed as a file with a known version of its own (a Maven POM anyone?) so long as that file is accessible to external systems by GAV co-ordinates too.
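
As a rough sketch of that idea, a bundle can be modelled as its own co-ordinates plus a list of parts, each pinned to an exact version. The `Bundle` class, the `group:artifact:version` string format, and the example names are assumptions made for illustration; the check for `-SNAPSHOT` follows the Maven convention for versions that can still change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bundle:
    """A product release: its own co-ordinates plus its pinned parts."""
    coordinates: str          # "group:artifact:version" of the bundle itself
    parts: tuple[str, ...]    # "group:artifact:version" of each constituent part

    def unpinned_parts(self) -> list[str]:
        # A part counts as "known" only if it names an exact version
        # that is not a moving snapshot.
        bad = []
        for part in self.parts:
            pieces = part.split(":")
            if len(pieces) != 3 or not pieces[2] or pieces[2].endswith("-SNAPSHOT"):
                bad.append(part)
        return bad


# Hypothetical bundle, for illustration only.
bundle = Bundle(
    coordinates="com.example.product:public-api-bundle:2.0.0",
    parts=(
        "com.example.service:rest-api:1.4.2",
        "com.example.config:api-config:0.9.0",
        "com.example.web:admin-ui:3.1.0-SNAPSHOT",
    ),
)
print(bundle.unpinned_parts())
# prints ['com.example.web:admin-ui:3.1.0-SNAPSHOT']
```

A bundle that reports any unpinned parts is not yet a repeatable description of the product, so it should not be allowed into the pipeline.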

This bundle moves through certain stages towards delivery. These are the stages in a deployment pipeline, such as automated acceptance testing, automated performance testing, durability testing, or whatever stages you (you are in control, aren’t you?) want to include in your pipeline.

Note a few observations can be made here:

- If DEV don’t become more OPS, then you are in trouble.

- If OPS don’t become more DEV, then you are in trouble.

- The Product Artifact Repository needn’t be the same as the DEV Artifact Repository. The Product Artifact Repository refers to bundles (expressed as products) that move down a pipeline, so if we need to get pointy-headed about security, we can do it here if we so wish.

- To move through a stage in the pipeline, a bundle gets deployed, provisioned, and tested on a representative deployment environment using the tests that “fit” with that stage. If it passes, it gets moved down the pipeline, otherwise it doesn’t.

- Moving down a pipeline could mean many things: logically promoting a given bundle to a new state following completion, actually copying bundles of stuff from one location to another (I don’t recommend this), or a combination of both.

- Eventually the bundle makes it to a staging environment, and it’s done everything but go live. You do canary releasing, right? And you’ve got in-flight upgrades sorted, right? No? Never mind, we’ll cover those in later posts. Ideally, you want to automate the migration from an old version of your solution to a new one, all the way to live, without your customer even noticing.

- So, now we are live. What if no-one wants the solution? What do you mean you’ve got no metrics that tell you what is used, and whether it’s used? Are you used to burning money? A principal aim of Continuous Delivery is to release regularly and in small increments. So, if the real metrics tell you no-one wants your brilliant new or planned feature, it’s not a fiasco: don’t keep it, bin it. But bin it on the basis of actual user feedback, not on the basis of imagined need.
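
The gating rule in the observations above (a bundle only moves down the pipeline if it passes the tests that fit the current stage) can be sketched in a few lines. This is a minimal illustration, not a real pipeline tool: the stage names come from the text, but their order, the `run_pipeline` function, and the stand-in checks are assumptions.

```python
from typing import Callable

# Stage names taken from the text; the order is an assumption.
STAGES = ["acceptance", "performance", "durability", "staging"]


def run_pipeline(bundle: str, checks: dict[str, Callable[[str], bool]]) -> list[str]:
    """Return the stages the bundle passed, stopping at the first failure."""
    passed = []
    for stage in STAGES:
        check = checks[stage]
        if not check(bundle):
            break  # a failed stage stops the bundle; it goes no further
        # Logical promotion: record the new state rather than copying files.
        passed.append(stage)
    return passed


# Stand-in checks for illustration; real ones would deploy, provision, and test.
checks = {
    "acceptance": lambda bundle: True,
    "performance": lambda bundle: False,
    "durability": lambda bundle: True,
    "staging": lambda bundle: True,
}
print(run_pipeline("com.example.product:public-api-bundle:2.0.0", checks))
# prints ['acceptance']
```

Recording the promoted state against the bundle's co-ordinates, rather than copying artifacts between locations, keeps a single source of truth for what has passed which stage.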

Conclusion

Hopefully the pictures set the scene enough to begin the conversation within your own organisation, and for the posts to follow. In our opinion, the two biggest obstacles to the widespread adoption of Continuous Delivery are politics and short-termism.

If you aren’t doing Continuous Delivery already, you can bet your life your competition either already is, or is thinking of doing it soon. So get a move on before your politics and short-termism kill you as a competitive business.

In the following series of blog posts we’ll investigate what it all means, and how you actually do it.

Enjoy.

The next blog post will be on the basic toolkit we found we needed and why …